Table of Contents

- Data Privacy and Protection

- Ethical AI and Bias Mitigation

- Transparency and Explainability

- Accountability and Liability

- Key Regulatory Frameworks

- Practical Steps for Compliance

- Conclusion

Every technological innovation, especially the most disruptive, brings great benefits but also casts shadows. The rapid spread of AI, and of large language models in particular, has raised growing concern among authorities. Monopolies, data management, privacy, bias, and social impact are just a few of the issues under institutional scrutiny. In this article, we shed some light on what you need to know to untangle this maze and pave the way for transparent and responsible innovation.

Data Privacy and Protection

The first concern is data privacy. AI systems often process vast amounts of data, including sensitive personal information. Users are paying so much more attention to how their data is shared that some companies, such as Apple, use privacy to differentiate their products from competitors.

Regulations like the General Data Protection Regulation (GDPR) in the European Union impose strict requirements on data collection, storage, and processing. In recent weeks, under this regulation, many European Meta users have been able to deny the platform the ability to use their data for training Meta’s new AI model.

Companies must ensure their AI systems comply with these regulations: non-compliance can lead to significant fines and reputational damage. Companies should implement robust data governance policies and employ data anonymization techniques to protect personal information.
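To make the idea of protecting personal information before it reaches an AI pipeline more concrete, here is a minimal sketch of pseudonymization with a keyed hash. Note that this is a weaker relative of full anonymization: pseudonymized data can generally still count as personal data under the GDPR, so it should be read as one safeguard among several. The field names, the `SECRET_KEY` value, and the helper functions are assumptions made up for this illustration, not part of any regulation or product.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would be loaded from a secrets manager,
# stored apart from the data, and rotated under the governance policy.
SECRET_KEY = b"replace-with-a-real-secret"

# Hypothetical field names chosen for this illustration.
DIRECT_IDENTIFIERS = {"email", "phone"}


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a keyed SHA-256 digest that stands in for the raw identifier."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict) -> dict:
    """Copy a record, replacing direct identifiers with pseudonyms."""
    return {
        field: pseudonymize(str(value)) if field in DIRECT_IDENTIFIERS else value
        for field, value in record.items()
    }


if __name__ == "__main__":
    user = {"email": "ada@example.com", "phone": "+39 000 0000", "country": "IT"}
    print(scrub_record(user))
```

Because the same input and key always produce the same digest, records belonging to one person can still be joined for analysis without exposing the raw identifier. Whoever holds the key can re-create that link, which is why the key has to be governed as carefully as the data itself.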

Ethical AI and Bias Mitigation

AI systems can unintentionally perpetuate biases present in training data, leading to unfair or discriminatory outcomes. Regulatory frameworks increasingly emphasize the need for ethical AI practices to ensure fairness and non-discrimination. Businesses must proactively identify and mitigate biases in their AI systems by using diverse datasets and conducting regular audits. With solid technology and data infrastructure, businesses can unlock the real power of AI.
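A regular audit can start from something very simple: comparing how often each group receives a positive outcome. The sketch below computes per-group selection rates and the gap between them, a measure often called the demographic parity difference. It is only one of several fairness metrics, the data is made up, and the function names are our own. What gap counts as acceptable is a policy decision that the code does not answer.

```python
from collections import defaultdict


def selection_rates(outcomes, groups):
    """Share of positive outcomes (1) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(outcomes, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(outcomes, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Made-up decisions (1 = approved) and made-up group labels.
    decisions = [1, 0, 1, 1, 0, 0, 1, 0]
    group_of = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(selection_rates(decisions, group_of))         # {'a': 0.75, 'b': 0.25}
    print(demographic_parity_gap(decisions, group_of))  # 0.5
```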

Transparency and Explainability

Transparency in AI decision-making is critical, especially in high-stakes industries like finance, healthcare, and law enforcement. Regulations are pushing for greater explainability, requiring companies to provide clear and understandable explanations of AI processes and decisions. This transparency builds trust and allows for the identification and correction of errors or biases. Recently, much AI research has focused on this aspect, achieving good results with increasingly transparent and interpretable models.
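As an illustration of what an interpretable model can offer, consider a linear scoring model, where every feature contributes additively to the result and the explanation can be read directly off the weights. The sketch below is a toy example under that assumption. The weights, feature names, and the `explain_linear_score` helper are hypothetical, and more complex models would need dedicated explanation techniques.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and each feature's additive contribution to it."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    # Sort so the most influential features (by magnitude) come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    # Made-up weights and applicant data for illustration only.
    weights = {"income": 0.5, "debt": -2.0, "years_employed": 0.25}
    applicant = {"income": 4.0, "debt": 1.5, "years_employed": 2.0}
    score, ranked = explain_linear_score(weights, bias=1.0, features=applicant)
    print(f"score = {score}")
    for name, contribution in ranked:
        print(f"{name}: {contribution:+.2f}")
```

A ranked list like this can be turned into a plain-language explanation for the person affected by the decision, for example that the debt level lowered the score more than the income raised it.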

Accountability and Liability

As AI systems become more integrated into business operations, questions of accountability and liability become crucial. Regulatory bodies are working to establish clear guidelines on AI accountability, emphasizing the need for human oversight. Companies must implement governance frameworks that define responsibilities and ensure compliance with these standards. Clear accountability structures help prevent legal disputes and enhance operational integrity. Implementing oversight mechanisms ensures responsible AI deployment and builds stakeholder confidence.

Key Regulatory Frameworks

Countries are approaching regulation quite differently from one another. There is no clear convergence yet, but the hope is that common ground can be found to prevent misuse of this technology while still encouraging its use. Understanding key regulatory frameworks is essential for businesses to ensure compliance and strategic alignment.

European Union’s AI Act

The European Union’s AI Act categorizes AI systems into different risk levels, with high-risk systems facing stringent requirements. These include rigorous testing, documentation, and human oversight. Companies operating in the EU must align their AI practices with these regulations to avoid penalties and ensure market access.
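One way a team might track the high-risk requirements mentioned above is a simple internal checklist that blocks a release until testing, documentation, and human oversight are all in place. The sketch below is a hypothetical internal record, not a legal test: the class, its field names, and the example system name are our own assumptions, and the actual obligations must be taken from the text of the AI Act.

```python
from dataclasses import dataclass


@dataclass
class HighRiskChecklist:
    """Hypothetical internal record; fields mirror the requirements named above."""
    system_name: str
    testing_completed: bool = False
    documentation_complete: bool = False
    human_oversight_assigned: bool = False

    def missing_items(self) -> list[str]:
        """List the requirements that have not been met yet."""
        checks = {
            "rigorous testing": self.testing_completed,
            "documentation": self.documentation_complete,
            "human oversight": self.human_oversight_assigned,
        }
        return [name for name, done in checks.items() if not done]

    def ready_for_release(self) -> bool:
        """True only when no requirement is missing."""
        return not self.missing_items()


if __name__ == "__main__":
    checklist = HighRiskChecklist("credit-scoring-model", testing_completed=True)
    print(checklist.ready_for_release())  # False
    print(checklist.missing_items())      # ['documentation', 'human oversight']
```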

United States Approach

In the United States, AI regulation is less centralized, with various federal and state-level initiatives. The Federal Trade Commission (FTC) has issued guidelines emphasizing fairness, transparency, and accountability. Companies must stay informed about these evolving regulations to ensure compliance. Navigating the fragmented regulatory landscape in the US requires continuous monitoring and adaptation. Engaging with legal experts and industry bodies can help businesses stay ahead of regulatory changes.

Global Standards

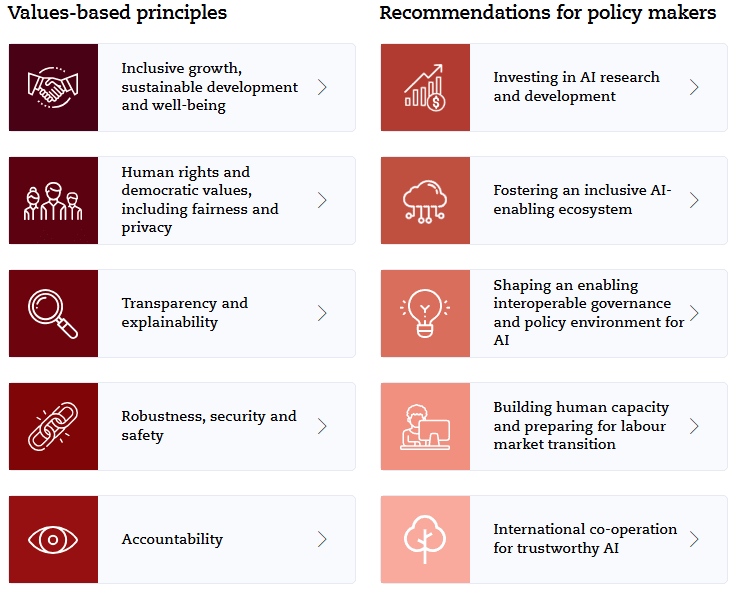

International organizations like the Organisation for Economic Co-operation and Development (OECD) have established AI principles emphasizing inclusive growth, human-centered values, and accountability. Aligning with these global standards can help companies ensure broader compliance and ethical practices.

Practical Steps for Compliance

Navigating AI regulations requires a strategic and proactive approach. Here are practical steps for companies to ensure compliance and foster responsible AI use.

- Conduct Comprehensive Impact Assessments: Before deploying AI systems, conduct thorough impact assessments to identify potential risks and regulatory requirements. Documenting these assessments can demonstrate compliance and guide ethical AI development.

- Implement Strong Governance Frameworks: Establish robust governance frameworks that define clear roles and responsibilities. Implement oversight mechanisms and conduct regular audits to ensure compliance with regulatory standards.

- Foster a Culture of Ethics and Transparency: Promote a culture of ethics and transparency within the organization. Train employees on ethical AI practices and encourage open dialogue about potential risks and challenges. Maintain clear communication with stakeholders about AI processes and decisions.

- Stay Informed and Adapt: The regulatory landscape for AI is continuously evolving. Stay informed about new guidelines and standards by engaging with industry bodies, participating in AI forums, and consulting with legal experts.

- Leverage Technology for Compliance: Use AI-driven tools for data anonymization, explainability, and bias detection to enhance compliance efforts. Investing in these technologies can streamline regulatory adherence and build trust in AI systems.

Conclusion

The regulatory landscape for AI is rapidly evolving, presenting both challenges and opportunities for businesses. Navigating AI regulations can be complex, but Neodata offers expert guidance and innovative solutions to help your business stay compliant and fully leverage AI’s potential. Contact us at info@neodatagroup.ai to learn how we can support your AI journey and ensure your business thrives in the evolving regulatory landscape.

AI Evangelist and Marketing specialist for Neodata

- Diego Arnone: https://neodatagroup.ai/author/diego/