Table of Contents

- Turning Complexity Into Vision

- Engineering Meaning Through AI

- From Prototype to Real-World Performance

- Refinement, Benchmarking, and Openness

- A New Standard for Responsible Streaming

- The Future of Video Is More Than Visual

Video content is everywhere. Now it’s time to understand what it actually says about the world.

Neodata set out to meet this challenge with VISDAM (the Video Streaming Deep Analysis Model), a research and innovation project that merges artificial intelligence with social and environmental awareness. Created in collaboration with the University of Messina (UniMe) and funded by the Italian National Recovery and Resilience Plan within the framework of the National Centre for HPC, Big Data and Quantum Computing, VISDAM redefines how we understand and measure the content flowing through our screens.

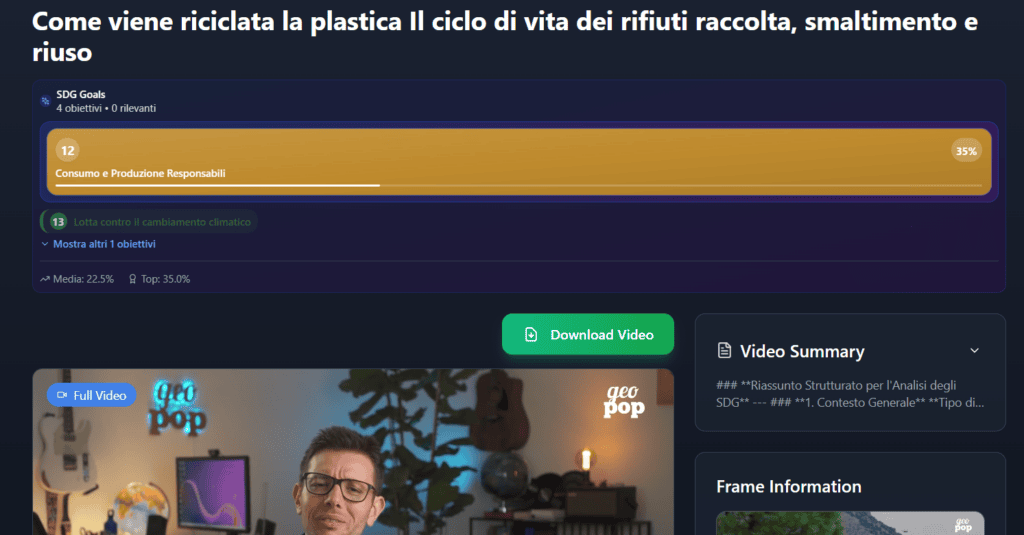

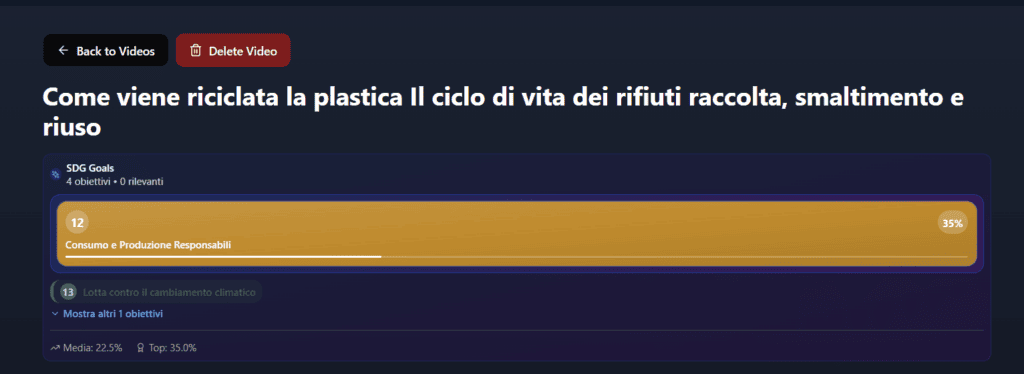

The goal was both ambitious and urgent: to develop an intelligent system capable of analyzing video content in real time, not only identifying scenes, objects, and actions, but also assessing their alignment with the UN’s Sustainable Development Goals (SDGs). In short, the aim was a new standard for video streaming, rooted in deep learning and driven by responsibility.

Turning Complexity Into Vision

The first challenge was conceptual: how can complex sustainability indicators be translated into something an AI system can interpret? Neodata and UniMe started by mapping the landscape — not just of technologies, but of values. What would it mean for a video to be sustainable? Which elements should the system recognize, and how should it tag them?

This phase focused on aligning technical feasibility with ethical clarity. The teams designed a system that could detect more than just pixels or patterns — it needed to understand context, behavior and interaction. From this analysis, the project’s core functionalities emerged: intelligent tagging, semantic scene description, and dynamic sustainability scoring, all delivered through an open architecture.
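To make the idea of dynamic sustainability scoring more concrete, here is a minimal sketch of how per-frame tags could be turned into a running score for each SDG. It is an illustration only, not VISDAM's actual method: the tag names, the `TAG_TO_SDG` weights, the `SustainabilityScorer` class, and the smoothing factor `alpha` are all assumptions made for this example.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from detected tags to SDG numbers and weights.
# The real VISDAM taxonomy is not described in this article.
TAG_TO_SDG = {
    "solar_panel": {7: 1.0, 13: 0.5},
    "recycling": {12: 1.0},
    "classroom": {4: 1.0},
}


@dataclass
class SustainabilityScorer:
    """Keeps an exponentially smoothed score per SDG across frames."""

    alpha: float = 0.5  # weight given to the newest frame (assumed value)
    scores: dict = field(default_factory=dict)

    def update(self, frame_tags):
        """Blend one frame's tags into the running scores.

        frame_tags maps a detected tag to a confidence in [0, 1].
        """
        # Per-frame evidence: the strongest weighted detection for each SDG.
        frame_scores = {}
        for tag, confidence in frame_tags.items():
            for sdg, weight in TAG_TO_SDG.get(tag, {}).items():
                candidate = confidence * weight
                frame_scores[sdg] = max(frame_scores.get(sdg, 0.0), candidate)

        # SDGs with no evidence in this frame decay toward zero.
        for sdg in set(self.scores) | set(frame_scores):
            previous = self.scores.get(sdg, 0.0)
            current = frame_scores.get(sdg, 0.0)
            self.scores[sdg] = (1 - self.alpha) * previous + self.alpha * current
        return dict(self.scores)


scorer = SustainabilityScorer(alpha=0.5)
first = scorer.update({"solar_panel": 0.8})
second = scorer.update({"recycling": 1.0})
```

In this sketch the score "moves" with the stream: an SDG that stops appearing fades gradually instead of vanishing, which is one simple way a score could stay readable on live content.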

Engineering Meaning Through AI

Once the vision was defined, the technical design took shape. Convolutional Neural Networks were selected to extract visual features, while Transformers and Recurrent Neural Networks enabled the system to grasp temporal dynamics across video frames.

But building powerful models was only part of the equation. Neodata focused on creating a robust middleware layer that could handle high-volume streaming input while exposing clean, well-documented APIs. This ensured flexibility and interoperability, setting the stage for a system that is scalable, customizable, and easy to integrate.
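One common way for a middleware layer to cope with high-volume streaming input is a bounded buffer that sheds the oldest frames when the analysis side falls behind. The sketch below illustrates that general pattern under stated assumptions; the `FrameBuffer` class and its drop-oldest policy are hypothetical and are not taken from VISDAM's middleware or APIs.

```python
from collections import deque


class FrameBuffer:
    """Bounded buffer that drops the oldest frame when full (assumed policy)."""

    def __init__(self, capacity):
        # A deque with maxlen evicts from the left when a new item is appended.
        self._frames = deque(maxlen=capacity)
        self.dropped = 0  # count of frames evicted before being analyzed

    def push(self, frame):
        """Add an incoming frame, recording an eviction if the buffer is full."""
        if len(self._frames) == self._frames.maxlen:
            self.dropped += 1
        self._frames.append(frame)

    def pop_batch(self, max_items):
        """Remove and return up to max_items frames, oldest first."""
        batch = []
        while self._frames and len(batch) < max_items:
            batch.append(self._frames.popleft())
        return batch


buffer = FrameBuffer(capacity=3)
for frame_id in range(5):
    buffer.push(frame_id)
batch = buffer.pop_batch(2)
```

Dropping old frames favors freshness over completeness, which suits live analysis; a system that must inspect every frame would instead block or scale out the consumers.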

From Prototype to Real-World Performance

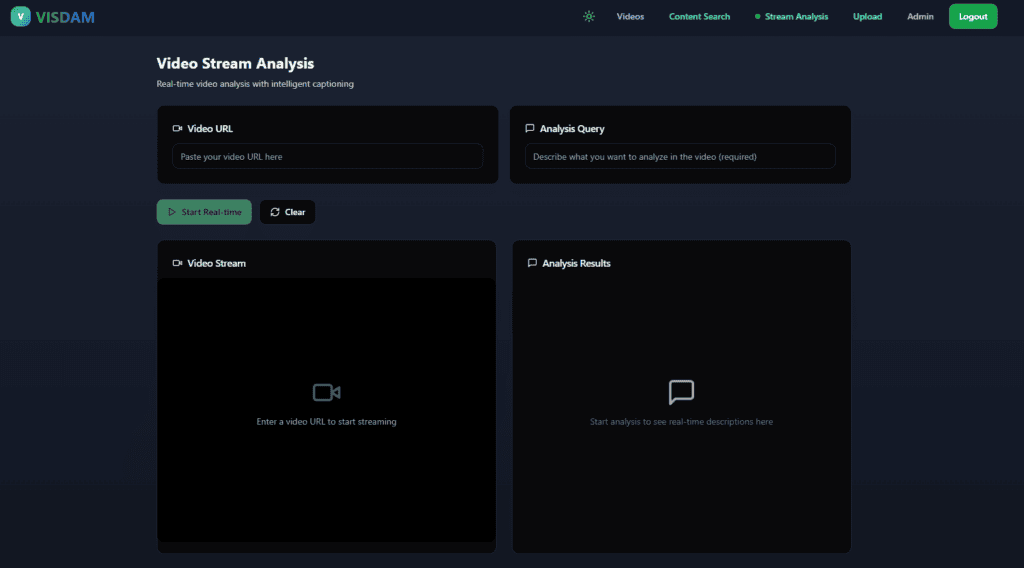

The next big step was implementation. A functional prototype with a user-friendly interface was developed, enabling demonstration of the system’s capabilities in real-world conditions. The AI models were integrated and tested not only for accuracy but also for their ability to evaluate sustainability in live-streaming scenarios.

This wasn’t simply about technical performance — it was about usability. Could the system deliver insight in a way that made sense to both technical and non-technical users? Would the sustainability scores be understandable and actionable? Continuous user feedback guided adjustments to both the algorithmic logic and the interface design.

Refinement, Benchmarking, and Openness

With the prototype in place, the focus shifted to optimization. The system was benchmarked using public datasets to validate its performance against existing video analysis tools. The results confirmed that VISDAM was not only accurate and responsive but also adaptable to complex content and unpredictable real-time conditions.

Documentation played a central role throughout the process. True to the principles of open science and FAIR data, Neodata ensured that the methodologies, findings, and source code would be made publicly available. This culminated in the release of VISDAM’s core components as open source, along with a complete set of APIs — a call to action for the broader AI and media research communities.

A New Standard for Responsible Streaming

The result is a system that brings together three powerful capabilities: semantic understanding, sustainability scoring, and open innovation. VISDAM can describe what is happening in a video, interpret its deeper meaning, and evaluate its social and environmental impact in real time.

This opens up new opportunities for content producers, platforms, and audiences. Brands can align their messaging with verified sustainable content. Publishers can surface stories that reflect ethical values. Viewers can choose media that resonates with their principles. And developers can build upon a solid foundation to create new tools and applications.

The Future of Video Is More Than Visual

VISDAM is just the beginning. Its real-time intelligence and semantic depth unlock new ways of interpreting video content: not only for sustainability, but for a wide range of contexts where meaning matters.

From education to news, from brand safety to cultural heritage, from digital rights to media transparency, the ability to understand and evaluate what flows through our screens opens up extraordinary opportunities. VISDAM offers a technological foundation that is ready to grow, adapt, and extend far beyond its original use case.

Because the challenge ahead is not just to process more video. It is to make it more understandable and meaningful.

Written by Neodata’s Marketing Team — experts in AI, data, and digital transformation.

- Neodata Marketing: https://neodatagroup.ai/author/adm_dino/
